Teach a Bot to Fish or Give a Bot a Fish? Integrate ChatGPT the Right Way

What Do Fish Have to Do with Models Like GPT-4? 🤖 + 🐠 = ✨

The phrases "Give a Bot a Fish" and "Teach a Bot to Fish" come from an excellent prompt engineering guide from Brex.

These phrases are ways of describing how Large Language Models (LLMs) can understand and interact with the outside world.

The source code from the examples in this post can be found on my GitHub: https://github.com/Brandoko/gpt-fish

Give a Bot a Fish 🐠

This strategy is the less complex of the two and can be implemented quite easily on your own. When we give a bot a fish, it means we are providing the model with everything it needs to succeed in its given task.

Now, let's explore this concept with a simple Python example.

I use poetry to simplify the creation and dependency management of my Python projects.

Install poetry https://python-poetry.org/docs/

Create a new poetry project:

poetry new gpt-fish

Enter the newly created repo:

cd gpt-fish

Install the OpenAI Python package:

poetry add openai

Install python-dotenv to read our OpenAI API key from an .env file:

poetry add python-dotenv

Create a new file for our example:

touch gpt_fish/give-a-bot-a-fish-simple.py

Create a .env file with:

OPENAI_API_KEY=<your openai api key here>

Add the following code to gpt_fish/give-a-bot-a-fish-simple.py:

import os

import openai
from dotenv import load_dotenv

# Load OPENAI_API_KEY from the .env file into the environment.
load_dotenv()
openai.api_key = os.environ["OPENAI_API_KEY"]

# Define a system prompt that gives the assistant its purpose and context.
system_prompt = """You are a bubbly assistant that helps a user plan their day.
Today is Sun Jun 25 2023. This is the user's list of things that need to be done today.
| Activity                     | Duration of activity |
|------------------------------|----------------------|
| Going fishing                | 1h                   |
| Cleaning the house           | 30m                  |
| Going to dinner with friends | 2h                   |
| Walk the dog                 | 30m                  |
| Write a dev blog post        | 3h                   |
"""

# The user can now ask a personal question since the assistant was augmented with the user's data.
user_prompt = """Give me the order of when I should do my activities. \
I want to get the annoying stuff done first, thanks!"""

# Note: openai.ChatCompletion exists only in openai package versions before 1.0.
chat_completion = openai.ChatCompletion.create(
    model="gpt-3.5-turbo",
    messages=[
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ],
)

print(chat_completion.choices[0].message.content)

Run the example

poetry run python gpt_fish/give-a-bot-a-fish-simple.py

This is what the assistant told me:

Sure, here's the order you could do your activities in:

1. Cleaning the house (30m)

2. Walk the dog (30m)

3. Going fishing (1h)

4. Going to dinner with friends (2h)

5. Write a dev blog post (3h)

This way, you can get the smaller and more annoying tasks out of the way early on and save the more enjoyable activities for later.

Pretty good! Without this data (the "fish") the assistant would have no idea what the user's activities are.
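
In a real app the "fish" would rarely be hard-coded into the prompt. Here is a minimal sketch of building the same system prompt from data. The function name build_system_prompt and the list-of-tuples shape are my own assumptions for illustration, not part of the example repo.

```python
from datetime import date


def build_system_prompt(today: date, activities: list[tuple[str, str]]) -> str:
    """Render the user's activities as a table inside the system prompt.

    Hypothetical helper: the name and the input shape are assumptions.
    """
    rows = "\n".join(f"| {name} | {duration} |" for name, duration in activities)
    return (
        "You are a bubbly assistant that helps a user plan their day.\n"
        f"Today is {today.strftime('%a %b %d %Y')}. "
        "This is the user's list of things that need to be done today.\n"
        "| Activity | Duration of activity |\n"
        "|----------|----------------------|\n"
        f"{rows}\n"
    )


# The same schedule used in the example above.
activities = [
    ("Going fishing", "1h"),
    ("Cleaning the house", "30m"),
    ("Going to dinner with friends", "2h"),
    ("Walk the dog", "30m"),
    ("Write a dev blog post", "3h"),
]

system_prompt = build_system_prompt(date(2023, 6, 25), activities)
print(system_prompt)
```

The resulting string can be passed as the system message exactly like the hard-coded prompt.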

"Give a Fish" Use Cases 💭

The "Give a Fish" method is often used to enable assistants to answer questions about any dataset.

For example, what if you wanted to build an app that could answer questions about Wikipedia articles that came out after the GPT model's training cutoff?

The app would work like this:

1. The user asks a question like: "Who won the 2023 U.S. Open?".

2. The app would search Wikipedia for this information and find the page for the 2023 U.S. Open.

3. The app would take the contents of the Wikipedia page and give it to the assistant similar to how we did above.

4. The LLM can now answer our question since it has relevant data.
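
The flow above can be sketched in a few lines. This is only an illustration under loud assumptions: FAKE_WIKI and search_wiki are hypothetical stand-ins for a real Wikipedia lookup, and the article text is a placeholder rather than real content.

```python
# Hypothetical in-memory stand-in for Wikipedia; a real app would call a search API.
FAKE_WIKI = {
    "2023 U.S. Open": "Placeholder text standing in for the article's contents.",
}


def search_wiki(question: str) -> str:
    """Return the text of the first page whose title appears in the question."""
    for title, text in FAKE_WIKI.items():
        if title.lower() in question.lower():
            return text
    return ""


def build_messages(question: str) -> list[dict]:
    """Stuff the retrieved article into the system prompt (the "fish")."""
    context = search_wiki(question)
    system_prompt = (
        "Answer the user's question using only the article below.\n\n"
        f"Article:\n{context}"
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]


messages = build_messages("Who won the 2023 U.S. Open?")
print(messages[0]["content"])
```

The messages list has the same shape as the one sent to the chat completion call in the first example.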

Want to try it? LangChain has a Wikipedia integration that makes executing this example easy.

Text Embeddings

Another popular approach in the "Give a Fish" strategy is to create text embedding vectors from your data and store them in a vector database.

A text embedding model turns text into a vector that represents the semantics of the text.

You can embed the user's question with the embedding model and query the vector database for the most relevant source material. This method's popularity stems from the fact that a user's question may not have a lot of lexical overlap with the actual answers in your dataset, so a typical term search would fail to grab relevant entries.

Once the vector database returns the most relevant source material for your user's question, you simply stuff that context into the prompt like we did with the user's schedule.
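
To make the lookup concrete, here is a toy sketch of semantic search using cosine similarity. Everything in it is an assumption for illustration: the three-dimensional vectors are made up by hand, whereas a real embedding model returns vectors with hundreds or thousands of dimensions, and a real vector database would replace the DOCUMENTS dictionary.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; closer to 1.0 means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up embeddings; a real app would get these from an embedding model.
DOCUMENTS = {
    "Our refund policy lasts 30 days.": [0.9, 0.1, 0.0],
    "The office is closed on public holidays.": [0.1, 0.9, 0.1],
    "Support is available by email.": [0.0, 0.2, 0.9],
}


def top_k(query_vector: list[float], k: int = 1) -> list[str]:
    """Return the k documents whose vectors are most similar to the query."""
    ranked = sorted(
        DOCUMENTS,
        key=lambda text: cosine_similarity(query_vector, DOCUMENTS[text]),
        reverse=True,
    )
    return ranked[:k]


# Pretend this is the embedding of "Can I get my money back?".
query_vector = [0.8, 0.2, 0.1]
best = top_k(query_vector)
print(best)
```

Notice that the pretend question shares no keywords with the refund document, yet it is the closest match because the vectors point in a similar direction.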

"Give a Fish" Limitations

Giving a bot a fish can be great, but the problems it solves are very static. You have to set up a rigid system that:

1. Takes a task as input text

2. Finds some meaningful content for the given task and stuffs it into the prompt

3. Outputs some text

Step 2 is usually a very specific process like semantic search or including information based on what the user is asking.

Instead of being limited to what we code for step 2, what if we could teach the bot to interact with the world around it? This would create a much higher leverage system where the bot can do anything within the realm of what it has access to.

Teach a Bot to Fish 🎣

Let's say I teach the bot how to use a Google search API, interact with a Python REPL, and send emails via an API.

Just by teaching it these 3 skills, the bot can now do all kinds of things. Examples:

User: "Find the customer support email address for Apple and send them an email asking how to take a screenshot"

The bot will search Google for something like "customer support email address for Apple" and observe the answer

The bot will send Apple an email for you

User: "What's the world record for the highest jump raised to the power of 0.65?"

The bot will search Google for something like "world record for the highest jump" and observe the answer

The bot will use the math utils in Python to raise the answer to 0.65

The point here is that by teaching the bot how to interact with the world, you create a high-leverage system that can handle far more tasks without you needing to code specific features.

Teaching a Bot to Fish in LangChain 🦜 🔗

LangChain has done the grunt work of setting up the prompts and interfaces for providing an LLM with a set of tools and getting it to enter an action-observation loop by using the tools to accomplish a goal.
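
To get a feel for what an action-observation loop is, here is a toy sketch in plain Python. It is not LangChain's implementation. The fake_model function is a scripted stand-in for the LLM, and both tools are hypothetical: the search tool returns a canned answer and the calculator only handles sums of integer products.

```python
def search(query: str) -> str:
    """Hypothetical search tool that returns a canned answer."""
    return "3h 12m"


def calculator(expression: str) -> str:
    """Toy calculator: evaluates sums of integer products like '3*3600 + 12*60'."""
    total = 0
    for term in expression.split("+"):
        product = 1
        for factor in term.split("*"):
            product *= int(factor)
        total += product
    return str(total)


TOOLS = {"Search": search, "Calculator": calculator}


def fake_model(history: list[str]) -> dict:
    """Scripted stand-in for the LLM: picks the next action from past observations."""
    observations = [line for line in history if line.startswith("Observation:")]
    if len(observations) == 0:
        return {"action": "Search", "action_input": "runtime of Avatar 2"}
    if len(observations) == 1:
        return {"action": "Calculator", "action_input": "3*3600 + 12*60"}
    seconds = observations[-1].split(": ")[1]
    return {"action": "Final Answer", "action_input": f"The runtime is {seconds} seconds."}


def run_agent(task: str, max_steps: int = 5) -> str:
    """Ask the model for an action, run the tool, feed the observation back, repeat."""
    history = [f"Task: {task}"]
    for _ in range(max_steps):
        step = fake_model(history)
        if step["action"] == "Final Answer":
            return step["action_input"]
        observation = TOOLS[step["action"]](step["action_input"])
        history.append(f"Observation: {observation}")
    raise RuntimeError("agent did not finish within max_steps")


answer = run_agent("Look up the runtime of Avatar 2 and convert it to seconds")
print(answer)
```

With a real LLM in place of fake_model, the model decides which tool to call next based on the growing history, which is where the leverage comes from.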

Let's try it out by creating a bot that can fulfill this task "lookup the runtime of avatar 2. Convert that to number to seconds and send the result to kocurbrandon@gmail.com".

These instructions assume you followed the setup from the first section.

Add LangChain to our project:

poetry add langchain

This example requires setting up the Gmail toolkit, which is a little involved. See LangChain's Gmail toolkit documentation for instructions.

Add the SerpAPI client:

poetry add google-search-results

Create a new file for our example:

touch gpt_fish/teach-a-bot-to-fish.py

Obtain an API key from SerpAPI (a search API) and add it to your .env file:

SERPAPI_API_KEY=<your serpapi key>

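Add the following code to gpt_fish/teach-a-bot-to-fish.py:

from dotenv import load_dotenv
from langchain.agents import AgentType, initialize_agent, load_tools
from langchain.agents.agent_toolkits import GmailToolkit
from langchain.chat_models import ChatOpenAI

# Load OPENAI_API_KEY and SERPAPI_API_KEY from the .env file.
load_dotenv()

# ChatOpenAI defaults to gpt-3.5-turbo, the model this post uses.
# (langchain.llms.OpenAI defaults to a completion model instead.)
llm = ChatOpenAI(temperature=0)

# Combine the search and math tools with the Gmail tools.
toolkit = GmailToolkit()
tools = load_tools(["serpapi", "llm-math"], llm=llm) + toolkit.get_tools()

agent = initialize_agent(
    tools,
    llm,
    agent=AgentType.STRUCTURED_CHAT_ZERO_SHOT_REACT_DESCRIPTION,
    verbose=True,
)

agent.run(
    "lookup the runtime of avatar 2. Convert that to number to seconds and send the result to kocurbrandon@gmail.com"
)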

This code sets up an agent that uses an OpenAI model (gpt-3.5-turbo by default) to work through a task with a set of tools. I'm using tools that are built into LangChain, but you can define custom tools to let the bot interact with anything you'd like. I have provided a collection of Gmail tools, a math tool (it executes math using a Python REPL), and a SerpAPI tool that lets it search the internet.

Running the Bot

WARNING: This bot can send emails on your behalf, so be careful what you ask it to do. 😬

poetry run python gpt_fish/teach-a-bot-to-fish.py

This next part never gets old. Watch how the agent thinks its way through the problem using the tools we gave it:

> Entering new chain...

Action:
{
  "action": "Search",
  "action_input": "runtime of Avatar 2"
}

Observation: 3h 12m
Thought: Convert 3h 12m to seconds

Action:
{
  "action": "Calculator",
  "action_input": "3h 12m to seconds"
}

Observation: Answer: 11520
Thought: Send the answer to kocurbrandon@gmail.com

Action:
{
  "action": "send_gmail_message",
  "action_input": {
    "message": "The runtime of Avatar 2 is 11520 seconds.",
    "to": ["kocurbrandon@gmail.com"],
    "subject": "Runtime of Avatar 2"
  }
}

Observation: Message sent. Message Id: 188f4d12ca3d9f53
Thought: I know what to respond

Action:
{
  "action": "Final Answer",
  "action_input": "The runtime of Avatar 2 is 11520 seconds. I have sent the answer to kocurbrandon@gmail.com."
}

> Finished chain.

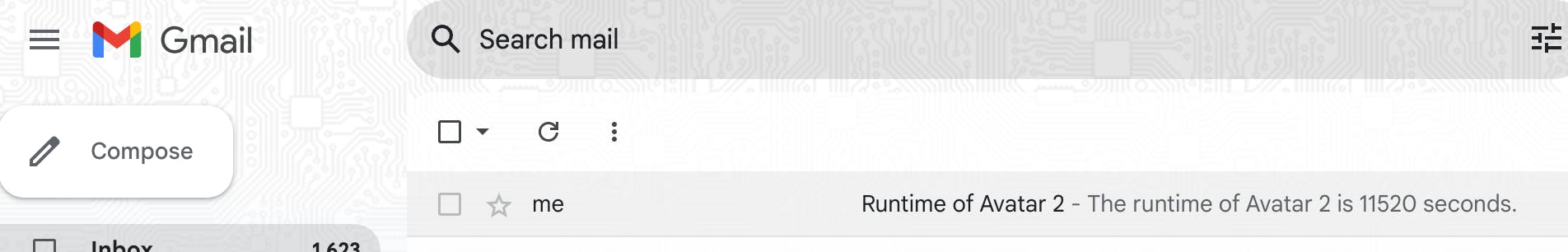

🤩 Low and behold, look what's in my Gmail inbox:

The agent was able to fully understand my request, and orchestrate all of the tools at its disposal to send me that email.
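
It is worth double-checking an agent's arithmetic before trusting it. Here is a small sketch that converts a duration like "3h 12m" into seconds. The helper duration_to_seconds is my own hypothetical function, not something the agent or LangChain uses.

```python
import re


def duration_to_seconds(text: str) -> int:
    """Convert strings like '3h 12m' into seconds (hypothetical helper)."""
    units = {"h": 3600, "m": 60, "s": 1}
    parts = re.findall(r"(\d+)\s*([hms])", text)
    return sum(int(amount) * units[unit] for amount, unit in parts)


# 3 * 3600 + 12 * 60 = 10800 + 720 = 11520
print(duration_to_seconds("3h 12m"))
```

The result matches the 11520 seconds the agent reported.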

GPT-3.5 vs GPT-4

All examples in this post used gpt-3.5-turbo. As you build more advanced agents with the "Teach a Bot to Fish" method, you may notice gpt-3.5-turbo start to struggle. For the "Give a Bot a Fish" use cases, gpt-3.5-turbo works about as well as gpt-4 for the most part. Given the large price difference between these models, this may be an important consideration.

Thanks for reading, and remember to use AI responsibly. 😎 🤝 🤖